The Art of Image Processing: Mastering the Ultimate Techniques for Image Element Segmentation

1. Preface

Image element segmentation is a fundamental and important technique in image processing, involving the separation of targets or regions of interest from an image. This article will provide a detailed introduction to several common image element segmentation techniques, along with corresponding code implementations, flowcharts, and algorithm analyses.

2. Threshold Segmentation

Threshold segmentation is one of the simplest and most commonly used image segmentation methods. It is based on the grayscale values of the image and segments the image into foreground and background by setting one or more thresholds. The threshold can be global or local (adaptive threshold). Global threshold segmentation is suitable for images with high contrast between the foreground and background, while adaptive threshold segmentation can deal with uneven brightness in images.

2.1. Flowchart

[Read Image]

|

V

[Convert to Grayscale]

|

V

[Select Global Threshold]

|

V

[Apply Threshold Segmentation]

|

V

[Output Segmentation Result]

2.2. Threshold Segmentation Algorithm Analysis

Threshold segmentation is a method of segmenting an image based on its grayscale values. The core idea is to select a grayscale threshold and divide the image pixels into two parts: one part consists of pixels with grayscale values higher (or lower) than the threshold, usually considered the foreground; the other part consists of pixels with grayscale values lower (or higher) than the threshold, usually considered the background.

Specific Algorithms:

- Global Threshold Segmentation: A single constant is used as the threshold for the whole image. It suits images where the foreground and background contrast strongly and the illumination is even.

- Adaptive Threshold Segmentation: The threshold is computed per pixel from the grayscale values in its local neighborhood, which suits images with uneven illumination or inconsistent local contrast.

- Otsu's Method: This method automatically determines the optimal global threshold from the grayscale histogram of the entire image. It assumes the histogram is bimodal (one peak for the foreground, one for the background) and picks the threshold that minimizes the within-class variance of the two classes, which is equivalent to maximizing the between-class variance.
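To make Otsu's criterion concrete, here is a minimal NumPy sketch. The function name `otsu_threshold` is hypothetical, and the code assumes an 8-bit grayscale array; it searches all 256 levels for the one that maximizes the between-class variance. In practice OpenCV offers the same idea through the `cv2.THRESH_OTSU` flag of `cv2.threshold`.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the level t that maximizes the between-class variance.

    Pixels <= t form one class and pixels > t the other.
    Assumption: `gray` is a uint8 array (values 0..255).
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)

    w0 = np.cumsum(prob)                 # weight of the class <= t
    w1 = 1.0 - w0                        # weight of the class > t
    cum_mean = np.cumsum(prob * levels)  # cumulative first moment
    total_mean = cum_mean[-1]

    # Between-class variance; left at 0 where one class is empty.
    valid = (w0 > 0) & (w1 > 0)
    sigma_b = np.zeros(256)
    sigma_b[valid] = ((total_mean * w0[valid] - cum_mean[valid]) ** 2
                      / (w0[valid] * w1[valid]))
    return int(np.argmax(sigma_b))
```

A binary mask then follows from `gray > otsu_threshold(gray)`. When several levels give the same variance, this sketch returns the lowest one.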

2.3. Code Implementation (Python Example)

import cv2

# Read the image as grayscale
image = cv2.imread('image.jpg', cv2.IMREAD_GRAYSCALE)
if image is None:
    raise FileNotFoundError("Could not read 'image.jpg'")

# Apply a global threshold: pixels above 127 become 255, all others 0
_, thresh = cv2.threshold(image, 127, 255, cv2.THRESH_BINARY)

# Display the image
cv2.imshow('Thresholded Image', thresh)
cv2.waitKey(0)
cv2.destroyAllWindows()
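The example above applies one fixed threshold to the whole image. For the adaptive case described earlier, the following is a rough NumPy-only sketch of mean-based local thresholding. The function name `adaptive_mean_threshold` and the defaults `block_size=15` and `c=5` are illustrative assumptions; the idea is similar to `cv2.adaptiveThreshold` with `cv2.ADAPTIVE_THRESH_MEAN_C`, but it is not claimed to reproduce that function exactly.

```python
import numpy as np

def adaptive_mean_threshold(gray, block_size=15, c=5):
    """Set a pixel to 255 if it exceeds (local mean - c), else 0.

    Assumptions: `gray` is a 2-D grayscale array and `block_size` is odd.
    """
    r = block_size // 2
    k = 2 * r + 1
    h, w = gray.shape

    # Replicate the border so every pixel has a full k x k window.
    padded = np.pad(gray.astype(np.float64), r, mode="edge")

    # Integral image with a leading row and column of zeros, so that
    # integral[i, j] is the sum of padded[:i, :j].
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)

    # Sum over each k x k window from four integral-image lookups.
    window_sum = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
                  - integral[k:k + h, :w] + integral[:h, :w])
    local_mean = window_sum / (k * k)

    return np.where(gray > local_mean - c, 255, 0).astype(np.uint8)
```

Because each pixel is compared with its own neighborhood, a slow brightness gradient across the image shifts the threshold along with it, which is what a single global constant cannot do.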

3. Region-Based Segmentation

Region-based segmentation methods segment an image based on the similarity between adjacent pixels, such as region growing and region merging. Region growing is a segmentation method based on similarity, starting from one or more seed points and merging neighboring pixels with similar attributes into the seed region. The key to region growing is defining the criteria for similarity and the conditions for stopping. This method is suitable for images where the object's interior color or texture is highly consistent.

3.1. Flowchart

[Read Image]

|

V

[Select Seed Points]

|

V

[Initialize List of Growth Points]

|

V

[Region Growing Iteration]

|

V

[Add New Growth Points if Criteria Met]

|

V

[Update Region Characteristics]

|

V

[Output Segmentation Result]

3.2. Analysis of Region-Based Segmentation Algorithms

Region-based segmentation methods rely on the similarity within image regions and the differences between regions. These methods typically start from one or more seed points and merge adjacent pixels into the corresponding region based on predefined criteria.

Specific Algorithms:

- Region Growing: Starting from seed points, neighboring pixels similar to the seed points are merged into the region where the seed points are located. Similarity criteria can be based on attributes such as grayscale values, color, texture, etc.

- Region Merging: Initially, each pixel is considered a region, and then neighboring regions with similar attributes are gradually merged until certain stopping criteria are met.

- Watershed Algorithm: The gradient image of the image is viewed as a topographic map, where bright areas represent peaks and dark areas represent valleys. The algorithm simulates the process of water filling the valleys and gradually rising. When the waters from different valleys meet, watersheds are established as boundaries.
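The flooding idea behind the watershed algorithm can be sketched with a priority queue. This is a simplified, marker-based variant and an assumption on my part rather than a full implementation: the function name `marker_watershed` is hypothetical, the markers (one positive label per valley) are supplied by the caller, and no explicit watershed line is drawn. Each pixel is simply given the label of the flood that reaches it first.

```python
import heapq
import numpy as np

def marker_watershed(gradient, markers):
    """Flood `gradient` from labelled `markers` (0 means unlabelled).

    Pixels are processed from lowest to highest gradient value, so each
    flood fills its valley before climbing towards the ridges.
    """
    labels = markers.copy()
    h, w = gradient.shape
    heap = []
    counter = 0  # tie-breaker: earlier pushes are popped first

    for y, x in zip(*np.nonzero(markers)):
        heapq.heappush(heap, (float(gradient[y, x]), counter, int(y), int(x)))
        counter += 1

    while heap:
        _, _, y, x = heapq.heappop(heap)
        for dy, dx in ((0, 1), (1, 0), (0, -1), (-1, 0)):  # 4-connectivity
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and labels[ny, nx] == 0:
                labels[ny, nx] = labels[y, x]
                heapq.heappush(
                    heap, (float(gradient[ny, nx]), counter, ny, nx))
                counter += 1
    return labels
```

With one marker in each of two valleys separated by a high-gradient ridge, the two labels spread outward and stop where they meet at the ridge. Library implementations such as `cv2.watershed` additionally mark the boundary pixels themselves.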

3.3. Code Implementation (Python Example)

import cv2
import numpy as np

def region_growing(img, seed):
    """Grow a region from `seed`, given as (row, col), on a grayscale image."""
    neighbors = [(0, 1), (1, 0), (-1, 0), (0, -1)]  # 4-connectivity
    region_threshold = 10
    region_size = 1
    region_mean = float(img[seed])

    # Initialize the list of growth points
    growth_points = [seed]

    # Mark array to indicate whether a pixel has been segmented
    # (np.bool was removed from NumPy; the built-in bool is used instead)
    segmented = np.zeros(img.shape, dtype=bool)
    segmented[seed] = True  # the seed itself belongs to the region

    while len(growth_points) > 0:
        new_points = []
        for point in growth_points:
            for neighbor in neighbors:
                # Calculate the position of neighboring pixel
                x_new = point[0] + neighbor[0]
                y_new = point[1] + neighbor[1]

                # Check if the neighboring pixel is outside the image boundaries
                if x_new < 0 or y_new < 0 or x_new >= img.shape[0] or y_new >= img.shape[1]:
                    continue

                # Check if the neighboring pixel has already been segmented
                if segmented[x_new, y_new]:
                    continue

                # Cast to float so the comparison is not done in uint8
                pixel_value = float(img[x_new, y_new])
                if abs(pixel_value - region_mean) < region_threshold:
                    segmented[x_new, y_new] = True
                    region_size += 1
                    # Update the running mean of the region
                    region_mean = (region_mean * (region_size - 1) + pixel_value) / region_size
                    new_points.append((x_new, y_new))
        growth_points = new_points

    return segmented

# Read the image as grayscale
image = cv2.imread('image.jpg', cv2.IMREAD_GRAYSCALE)
if image is None:
    raise FileNotFoundError("Could not read 'image.jpg'")

# Set the seed point as (row, col); it must lie inside the image
seed_point = (100, 100)

# Apply the region growing algorithm
segmented_image = region_growing(image, seed_point)

# Display the image
cv2.imshow('Segmented Image', segmented_image.astype(np.uint8) * 255)
cv2.waitKey(0)
cv2.destroyAllWindows()

4. Edge Detection Segmentation

Edge detection techniques identify the boundaries of objects in an image by detecting points where there is a significant change in brightness. Common edge detection operators include Sobel, Canny, Prewitt, and Laplacian. Edge detection is often the first step in image segmentation, and the detected edges can be used for further segmentation tasks.

4.1. Flowchart

[Read Image]

|

V

[Convert to Grayscale]

|

V

[Apply Gaussian Blur]

|

V

[Compute Gradient and Direction]

|

V

[Non-Maximum Suppression]

|

V

[Double Threshold Detection and Edge Linking]

|

V

[Output Edge Detection Result]

4.2. Edge Detection Segmentation Algorithm Analysis

Edge detection segmentation relies on the information of object edges within the image. Edges typically correspond to significant changes in image brightness, which can be detected by examining the image's gradient.

Specific Algorithms:

- Sobel Operator: Detects edges by approximating the first-order derivatives of the image in the horizontal and vertical directions with two 3x3 kernels. Large gradient magnitudes indicate edges.

- Canny Edge Detection: A multi-stage edge detection algorithm that includes Gaussian filtering, gradient calculation, non-maximum suppression, and double thresholding with edge linking (hysteresis). The Canny algorithm is designed around three goals: a low error rate, edges located close to their true position, and a single response per edge.

- Laplacian Operator: Detects edges by calculating the second-order derivatives of the image, with edges corresponding to the zero-crossing points. Because second derivatives amplify noise, the image is usually smoothed first.
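As an illustration of the gradient-based operators listed above, here is a small NumPy sketch of the Sobel gradient magnitude. The function name `sobel_magnitude` and the edge-replicated border handling are assumptions made for this example; `cv2.Sobel` is the usual way to compute the same derivatives in practice.

```python
import numpy as np

def sobel_magnitude(gray):
    """Gradient magnitude from the two 3x3 Sobel kernels.

    Borders are handled by replicating the edge pixels (an assumption
    of this sketch).
    """
    g = np.pad(gray.astype(np.float64), 1, mode="edge")

    # Horizontal derivative: right column minus left column,
    # each smoothed vertically with weights [1, 2, 1].
    gx = ((g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:])
          - (g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2]))

    # Vertical derivative: bottom row minus top row,
    # each smoothed horizontally with weights [1, 2, 1].
    gy = ((g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:])
          - (g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:]))

    return np.hypot(gx, gy)
```

On an image with a vertical step from 0 to 100, the magnitude is 400 in the two columns next to the step and 0 elsewhere, so thresholding the magnitude yields the edge location.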

4.3. Code Implementation (Python Example)

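import cv2

# Read the image as grayscale
image = cv2.imread('image.jpg', cv2.IMREAD_GRAYSCALE)
if image is None:
    raise FileNotFoundError("Could not read 'image.jpg'")

# Smooth first, as in the flowchart; cv2.Canny does not blur the image itself
blurred = cv2.GaussianBlur(image, (5, 5), 0)

# Perform edge detection using the Canny algorithm
# (100 and 200 are the lower and upper hysteresis thresholds)
edges = cv2.Canny(blurred, 100, 200)

# Display the image
cv2.imshow('Edges', edges)
cv2.waitKey(0)
cv2.destroyAllWindows()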
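`cv2.Canny` performs the double-threshold and edge-linking step internally. To show what that step from the flowchart does, here is a minimal sketch operating on a gradient-magnitude array. The function name `hysteresis` is hypothetical, and the sketch skips non-maximum suppression, so it illustrates only the linking logic.

```python
from collections import deque
import numpy as np

def hysteresis(magnitude, low, high):
    """Keep strong pixels (>= high) plus weak pixels (>= low) that are
    8-connected to a strong pixel, directly or through other weak pixels."""
    strong = magnitude >= high
    candidate = magnitude >= low
    edges = strong.copy()
    h, w = magnitude.shape

    # Breadth-first search outward from every strong pixel.
    queue = deque((int(y), int(x)) for y, x in zip(*np.nonzero(strong)))
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (0 <= ny < h and 0 <= nx < w
                        and candidate[ny, nx] and not edges[ny, nx]):
                    edges[ny, nx] = True
                    queue.append((ny, nx))
    return edges
```

Weak responses that touch a strong edge are kept as part of it, while isolated weak responses, which are more likely to be noise, are discarded.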

5. Graph-Based Segmentation

Graph-based segmentation methods, such as Graph Cut, utilize graph-theoretic models for image segmentation. They map the image onto a graph and then find the optimal segmentation by minimizing an energy function.

5.1. Flowchart

[Read Image]

|

V

[Initialize Graph Cut Model]

|

V

[Construct Graph Nodes and Edges]

|

V

[Define Energy Function]

|

V

[Minimize Energy Function]

|

V

[Output Segmentation Result]

5.2. Analysis of Graph-Based Segmentation Algorithms

Graph-based segmentation methods transform the image into a graph form, where the nodes of the graph represent pixels and the edges represent the relationships between pixels. By defining an energy function, the image segmentation problem is converted into a graph-cut problem that minimizes energy.

Specific Algorithms:

- Graph Cut: An energy function is defined, including a data term and a smoothness term. The data term measures the compatibility of pixels with a certain label, and the smoothness term encourages neighboring pixels to have the same label. The energy is minimized by computing a minimum cut (maximum flow) on the graph, which splits the image into foreground and background; extensions of the method handle more than two labels.

- GrabCut: An extension of Graph Cut, where the user only needs to roughly specify the foreground, typically with a bounding rectangle. The algorithm then alternates between estimating color models for the foreground and background and re-running the graph cut, refining the segmentation at each iteration.

- Normalized Cut: Building on the concept of Graph Cut, it normalizes the cost of a cut by how strongly each resulting segment is connected to the whole graph. This avoids the tendency of a plain minimum cut to split off small isolated groups of pixels, and it favors segments that are tightly connected internally and loosely connected to each other.
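The energy minimization behind Graph Cut can be illustrated on a tiny one-dimensional "image". The sketch below is an assumption-laden toy, not a production solver: the function name `min_cut_labels`, the absolute-difference data term, and the constant smoothness penalty are all choices made for this example, and the maximum flow is found with a plain breadth-first augmenting-path search in pure Python.

```python
from collections import defaultdict, deque

def min_cut_labels(values, mu_fg, mu_bg, smoothness):
    """Label each value 1 (foreground) or 0 (background) by min cut.

    Energy = sum of |value - mean of chosen label|
             + smoothness * (number of neighboring pairs with different labels)
    """
    n = len(values)
    s, t = n, n + 1  # source stands for foreground, sink for background
    cap = defaultdict(lambda: defaultdict(float))

    def add_edge(u, v, c):
        cap[u][v] += c
        cap[v][u] += 0.0  # ensure the reverse residual edge exists

    for p, v in enumerate(values):
        add_edge(s, p, abs(v - mu_bg))  # paid if p ends up background
        add_edge(p, t, abs(v - mu_fg))  # paid if p ends up foreground
        if p + 1 < n:                   # smoothness term between neighbors
            add_edge(p, p + 1, smoothness)
            add_edge(p + 1, p, smoothness)

    def reachable_from_source():
        parent = {s: None}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        return parent

    while True:
        parent = reachable_from_source()
        if t not in parent:
            break  # no augmenting path left: the flow is maximal
        # Find the bottleneck capacity along the path, then push flow.
        flow, v = float("inf"), t
        while parent[v] is not None:
            flow = min(flow, cap[parent[v]][v])
            v = parent[v]
        v = t
        while parent[v] is not None:
            u = parent[v]
            cap[u][v] -= flow
            cap[v][u] += flow
            v = u

    # Nodes still reachable from the source lie on the foreground side.
    return [1 if p in parent else 0 for p in range(n)]
```

For the values `[10, 12, 150, 11, 200, 205, 60, 210]` with `mu_fg=200` and `mu_bg=10`, a smoothness of 0 labels every value independently, so the outliers 150 and 60 flip sides. With a smoothness of 100, the cheapest labeling has a single boundary and the outliers follow their neighbors. Real images use the same construction on a 2-D pixel grid, usually with a dedicated max-flow library.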

5.3. Code Implementation (Python Example)

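import cv2
import numpy as np
from skimage import segmentation

# Read the image (OpenCV loads color images in BGR order)
image = cv2.imread('image.jpg')
if image is None:
    raise FileNotFoundError("Could not read 'image.jpg'")

# scikit-image expects RGB channel order
rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Over-segment the image into superpixels with SLIC.
# Note: SLIC is a clustering-based superpixel method, not a graph cut;
# superpixels like these are often used as the nodes of a graph in a
# later graph-based segmentation step.
labels = segmentation.slic(rgb, compactness=30, n_segments=400)

# Draw the superpixel boundaries (returns a float RGB image in [0, 1])
segmented_image = segmentation.mark_boundaries(rgb, labels)

# Convert back to 8-bit BGR for display with OpenCV
display = cv2.cvtColor((segmented_image * 255).astype(np.uint8), cv2.COLOR_RGB2BGR)
cv2.imshow('Segmented Image', display)
cv2.waitKey(0)
cv2.destroyAllWindows()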

6. Tool Recommendation

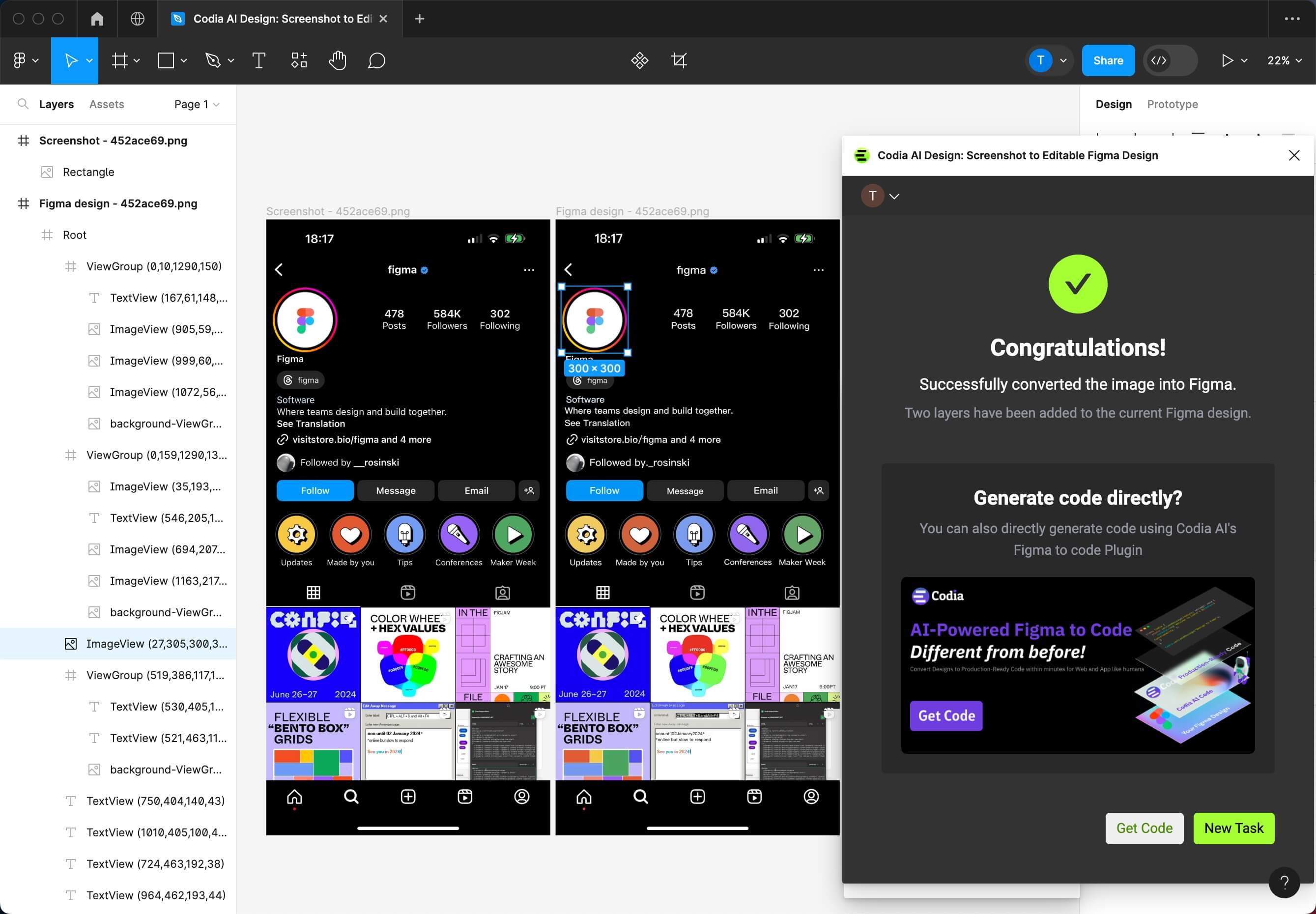

I recently discovered a tool called Codia AI Design. It converts images into Figma design drafts and can also slice designs, so the image and text resources they contain are easy to extract. The experience is as shown in the following image: